Clients frequently ask us for guidance on the best way to collect customer satisfaction feedback for their virtual assistants. For customer experience-minded companies, it’s understandable why: “Our customers love our customer care agents, we want to make sure they love our chatbot too!” Along this line of thinking, it’s tempting to make a direct comparison between human-human interactions and human-chatbot interactions. If we view a chatbot as a sort of “digital employee”, it makes sense to try to evaluate it using the same metrics as other customer care representatives. For a number of reasons, however, this may not be the best approach. In fact, in almost all cases we would recommend binary feedback (a simple positive or negative rating) instead. Why? Users are more likely to give it, and it offers clearer benchmarks for optimizing your bot.

What is CSAT?

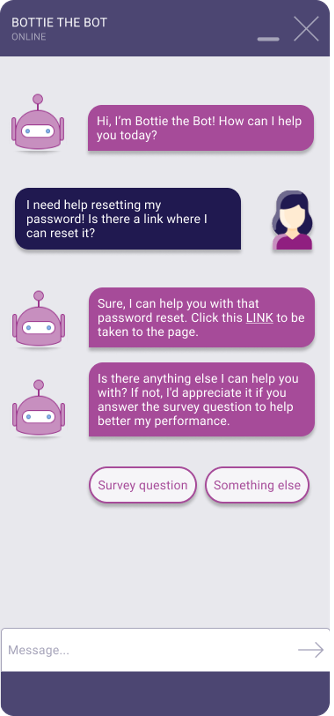

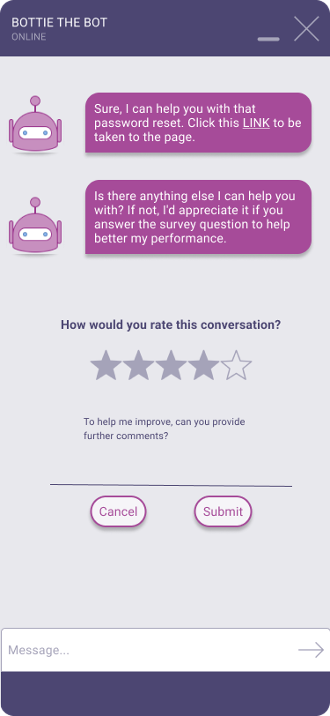

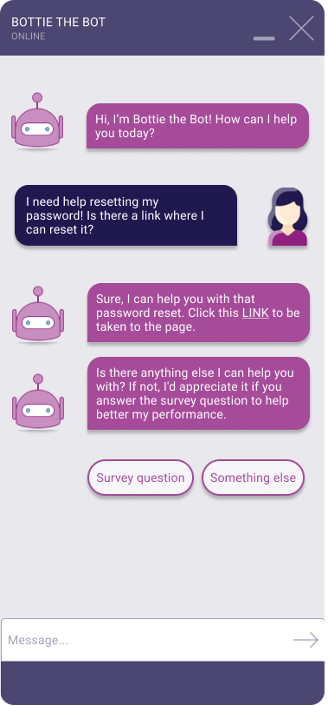

Customer satisfaction, or CSAT, is commonly measured through surveys where a customer is prompted to rate their experience on a scale of one to five. A score of one denotes that the customer is very unsatisfied with their experience, a five that they are very satisfied, and a three that the experience was neutral. A CSAT survey implemented within your chatbot might look like the example shown here.

One challenge posed by this metric is that the average response rate for CSAT surveys is rather low, around 20%, although it can range widely, from 5% up to 60%. Factors such as how easy it is to respond, how long the survey takes to complete and how important the topic is to the customer all influence the likelihood that the customer responds. Typically, customers are much more likely to rate their experience with a person than they are with a chatbot.
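As a rough illustration of the two numbers discussed above, the sketch below computes a response rate and an average score from a handful of survey prompts. The `SurveyPrompt` record and the sample data are hypothetical and not taken from any particular chatbot platform.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class SurveyPrompt:
    """One survey shown to a customer (hypothetical record, for illustration)."""
    conversation_id: str
    rating: Optional[int] = None  # 1-5 if the customer answered, None if ignored


def response_rate(prompts: Sequence[SurveyPrompt]) -> float:
    """Share of shown surveys that received an answer."""
    if not prompts:
        return 0.0
    answered = sum(1 for p in prompts if p.rating is not None)
    return answered / len(prompts)


def average_rating(prompts: Sequence[SurveyPrompt]) -> Optional[float]:
    """Mean 1-5 rating over answered surveys, or None if nobody answered."""
    ratings = [p.rating for p in prompts if p.rating is not None]
    if not ratings:
        return None
    return sum(ratings) / len(ratings)


# Made-up sample: 10 surveys shown, 2 answered.
sample = [SurveyPrompt(f"c{i}") for i in range(8)]
sample += [SurveyPrompt("c8", rating=5), SurveyPrompt("c9", rating=1)]

print(f"response rate: {response_rate(sample):.0%}")
print(f"average rating: {average_rating(sample)}")
```

Reporting the response rate next to the score makes it clear how many customers an average actually represents.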

Improving your response rate

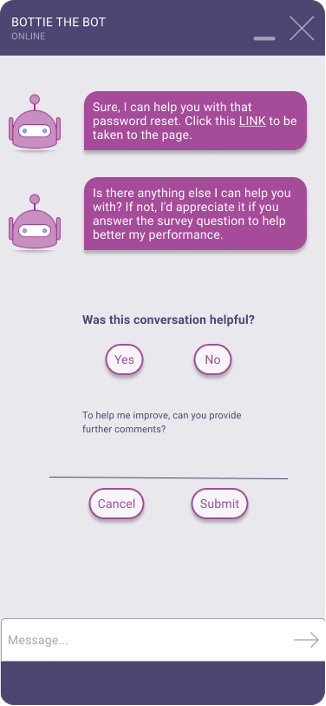

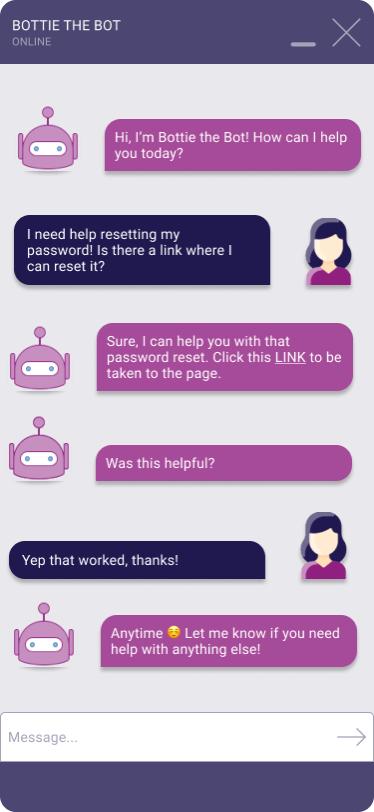

One of our clients was shocked to find that when they started measuring CSAT in their project, they only received a small fraction of the number of responses they were used to seeing for customer care agents. To improve their response rate, they tried prompting the survey in several different ways, both conversationally and through the UI. Despite these efforts, their response rate hovered around 10%. Ultimately, they decided to try simplifying their survey. They noticed that of the three questions posed by the survey, most customers were only answering the first. The client had also noticed that virtually no customers were assigning “in-between” scores of 2, 3 or 4. Because of these observations, they decided to reduce three questions to one and made that question binary rather than a scale of 1 to 5. Overnight, they saw their response rate jump from 10% to 25%.

To improve their response rate further, they could also consider reducing the steps required for the customer to provide feedback, for example by allowing the user to provide feedback directly on the response bubble. The easier it is for the customer to provide feedback, the more likely they are to give a response.

Improving your bot’s customer feedback

Other questions we hear frequently from our clients are, “What CSAT scores should I expect for my chatbot?” and “What can we do to improve our chatbot’s CSAT scores?”. While there is no clear-cut answer to the first of these questions, we can offer the following piece of advice: if you intend to compare your chatbot’s scores to your live agents’, build your chatbot so that it can do (at least some of) what your agents can do.

Studies on customer satisfaction with chatbots have shown that by far the single greatest determinant of CSAT is the bot’s ability to resolve the customer’s problem, that is to say, the bot’s ability to actually do things. This can be achieved through integrations with backend systems that enable your bot to provide the customer with detailed support. A customer who receives a generic response to a complex issue is unlikely to rate their experience as positive.

Customer feedback for optimization

One of our clients observed this firsthand in their project. They found that the areas where they consistently received negative feedback from users were those that lacked backend integrations. They assume, for example, that if a user asks the chatbot to update their password and successfully completes this action within the chat, the chat was successful and the user is satisfied. As a result, they decided to prompt conversationally for customer feedback only when providing the user with a generic response (for example, an FAQ article).
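The prompting rule described above can be sketched in a few lines. This is only an illustration under assumed names: the `ResponseKind` categories and the `should_prompt_for_feedback` helper are hypothetical and do not come from the client's project or any specific platform.

```python
from enum import Enum


class ResponseKind(Enum):
    """How the bot answered the user (hypothetical categories)."""
    GENERIC_ANSWER = "generic_answer"  # e.g. a link to an FAQ article
    BACKEND_ACTION = "backend_action"  # e.g. a password update completed in the chat


def should_prompt_for_feedback(kind: ResponseKind) -> bool:
    """Ask for feedback only after generic answers.

    A completed backend action is assumed to mean the user is satisfied,
    so no survey is shown in that case.
    """
    return kind is ResponseKind.GENERIC_ANSWER


for kind in ResponseKind:
    print(kind.value, "->", should_prompt_for_feedback(kind))
```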

They use this feedback to determine where FAQs need improved clarity and where it is worth investing in backend integrations. Because they prompt for feedback only where they expect users might be unsatisfied, this client’s binary feedback score is quite low (around 20%). Despite this, they consider the score perfectly acceptable. Why? They view their feedback as a tool for optimization and not as a traditional CSAT measurement.
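One way to use binary feedback for optimization in this spirit is to group it by topic and look at the weakest topics first. The sketch below assumes feedback arrives as `(topic, is_positive)` pairs; the topic names, the data and both helper functions are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def positive_share_by_topic(feedback: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Map each topic to the share of positive answers among its binary feedback."""
    counts = defaultdict(lambda: [0, 0])  # topic -> [positive, total]
    for topic, is_positive in feedback:
        counts[topic][0] += int(is_positive)
        counts[topic][1] += 1
    return {topic: pos / total for topic, (pos, total) in counts.items()}


def weakest_topics(shares: Dict[str, float], limit: int = 3) -> List[str]:
    """Topics ordered from lowest to highest positive share."""
    return sorted(shares, key=shares.get)[:limit]


# Made-up feedback: (topic, is_positive)
feedback = [
    ("opening_hours", True),
    ("opening_hours", True),
    ("refund_policy", False),
    ("refund_policy", False),
    ("refund_policy", True),
    ("change_address", False),
]

shares = positive_share_by_topic(feedback)
for topic in weakest_topics(shares):
    print(f"{topic}: {shares[topic]:.0%} positive")
```

Topics at the top of this list are candidates for a clearer FAQ or a backend integration. The overall score matters less here than the ranking between topics.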

CSAT Takeaways

Measuring customer feedback within your project can give you invaluable insights into how your customers perceive your bot as well as how it is performing. To make the most of your customer feedback, we would recommend using a more “bot-centric” approach than traditional CSAT measures. If you must make direct comparisons between your chatbot and your agents, take those comparisons with a grain of salt. This is especially the case if your chatbot is capable of performing only a fraction of the actions your agents can.