There’s no denying that LLMs, such as OpenAI’s GPT series, will have a huge part to play in the growing conversational AI landscape. Countless posts and articles have covered using ChatGPT for various tasks. Several key capabilities of GPT warrant further exploration, such as its ability to summarise, classify and generate text. Focusing on classification, we asked: ‘could we use an LLM to effectively replace the traditional ML model that powers most intent-based chatbots?’

When we think of LLMs, we may consider a future where there are no real ‘intents’ expressly defined. An LLM may be able to handle anything asked of it without a rigid, pre-planned structure. However, as of today, we’re focusing on where an LLM could fit in and enhance the current architecture. With that in mind, we adopted a loosely scientific approach, comparing the LLM’s classification abilities with those of a real-life chatbot.

Firstly, we need to accept that no chatbot identifies intents perfectly, and so there is already an element of misclassification. Long utterances, multiple intents, and a host of other things can all lead to the user being taken down the wrong path. Much time is invested in identifying and reducing those types of conversations. Perhaps an LLM can help with that process?

For our benchmark study, we took data from a production customer service chatbot to see whether we could improve its intent recognition using LLMs. We took 400 random real-life user inputs of varying lengths and manually labelled them with the appropriate intents. Next, we ran those previously unseen utterances against the bot platform’s ML model, which was 60.5% accurate. Whilst this may not appear highly accurate, the production chatbot does not rely on the ML model alone: it also makes heavy use of syntactical language rules as an extra layer on top. Human labelling is not infallible either, and multiple labelling passes would reduce errors or biases. For our small-scale experiment, we did only one labelling pass.

Summary results for regular ML model

9000 training questions covering 75 intents returning a 60.5% accuracy using the bot platform’s ML model
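For reference, 60.5% on 400 utterances corresponds to 242 correct predictions. The scoring itself can be sketched as below. This is a minimal illustration, not our actual benchmarking harness; the function name and the toy intent names are hypothetical.

```python
def accuracy(predicted, expected):
    """Fraction of utterances whose predicted intent matches the human label."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must be the same length")
    if not expected:
        raise ValueError("no labelled utterances supplied")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


# Toy data: hypothetical intent names, not taken from the production bot.
expected = ["billing", "delivery", "returns", "billing"]
predicted = ["billing", "delivery", "billing", "billing"]
print(f"{accuracy(predicted, expected):.1%}")  # 3 of 4 correct -> 75.0%
```

The same function can score every approach in this article, as long as each one is run against the same 400 labelled utterances.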

Prompt Design

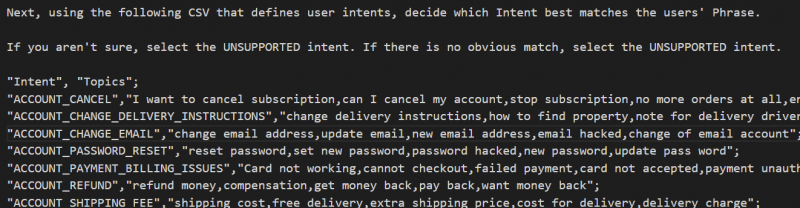

We wrote out our 75 intent names and added around 4 to 6 succinct ‘topics’ describing what each intent was about. These instructions were passed in the prompt along with the user utterance for classification. A sample of those intent topic descriptions is shown in the accompanying image.

We also included some guardrail prompts to ensure it only selected an intent from the list given, and returned ‘unsupported’ if not sure. There were also some rules around selecting certain intents as a priority over others.
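A prompt of this shape could be assembled roughly as follows. This is a sketch under assumptions: the intent names, topics, rule text and exact wording are hypothetical stand-ins, not the prompt we used, and the call to the model itself is left out.

```python
FALLBACK = "unsupported"

# Hypothetical intents and topics for illustration only.
INTENT_TOPICS = {
    "check_order_status": ["where is my order", "tracking", "delivery date", "late parcel"],
    "request_refund": ["money back", "refund status", "return an item", "cancel and refund"],
}


def build_prompt(utterance, intent_topics, priority_rules=()):
    """Build one classification prompt: guardrails, intent list, then the utterance."""
    lines = [
        "Classify the user message into exactly one intent from the list below.",
        f"Reply with the intent name only. If you are not sure, reply '{FALLBACK}'.",
    ]
    # Optional rules for preferring one intent over another.
    lines.extend(priority_rules)
    lines += ["", "Intents:"]
    for name, topics in intent_topics.items():
        lines.append(f"- {name}: {', '.join(topics)}")
    lines += ["", f"User message: {utterance}", "Intent:"]
    return "\n".join(lines)


prompt = build_prompt(
    "My parcel still hasn't arrived",
    INTENT_TOPICS,
    priority_rules=["If a message mentions both a refund and an order, choose request_refund."],
)
print(prompt)
```

Note that the whole intent list is rebuilt into every prompt, which is what drives the per-call token usage.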

The initial results were impressive, as it successfully predicted the intent for 64.5% of the utterances, beating the regular ML model.

Summary results for prompt design

64.5% accuracy using prompt design with GPT-3.5 with 375 ‘topic’ summaries covering 75 intents, with 4-6 topics defined per intent.

Thoughts on prompt design for classification

This method appears to be a very effective way to discern the user’s intent, but it does have some drawbacks. We would need to send the entire list of intents and their topic descriptions with every utterance. Each API call might cost only a fraction of a dollar, but multiplied over thousands of conversations the total can become significant.
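To make the cost point concrete, here is a back-of-the-envelope calculation. All three input figures are illustrative assumptions, not our measured token counts or OpenAI’s actual prices.

```python
def monthly_prompt_cost(tokens_per_call, price_per_1k_tokens, calls_per_month):
    """Estimated monthly spend when the full intent list is sent with every call."""
    return tokens_per_call / 1000 * price_per_1k_tokens * calls_per_month


# Assumed figures for illustration only.
cost = monthly_prompt_cost(
    tokens_per_call=3000,        # assumed size of the intent list plus the utterance
    price_per_1k_tokens=0.002,   # assumed price in dollars per 1,000 tokens
    calls_per_month=100_000,     # assumed number of classified utterances
)
print(f"${cost:,.2f}")
```

Under these assumptions each call costs $0.006, yet the monthly total is about $600, almost all of it spent resending the same intent descriptions.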

We also found it challenging to ‘review and improve’ the results. For example, if a particular user utterance failed, it wasn’t always obvious what to change amongst the topic descriptions. Whilst that’s also true with maintaining regular ML training phrases, the in-prompt method seemed more like guesswork than normal.

The model also cannot return a ‘confidence’ or ‘probability’ level for its prediction. We found that any attempt to ask for a percentage confidence, or for a list of the top 3 suggestions, greatly reduced its accuracy.

Fine-tuning

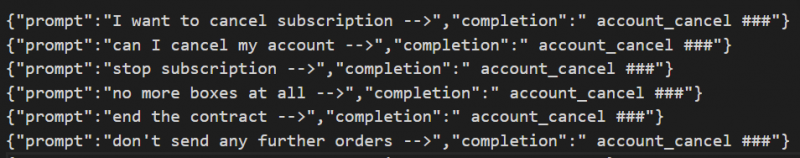

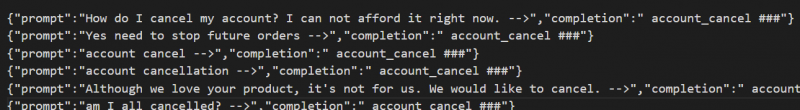

Fine-tuning adds a layer of extra information on top of the pre-trained model so that it can, in effect, ‘remember’ some facts. Because we wanted that fine-tuned layer to classify a user input, we prepared the data in a pattern that tells the model to return (complete) our classification. At the time of writing, fine-tuning is only available for the base GPT-3 models, with Davinci being the most capable of those.

Initially, we used the topics that worked well in the in-prompt approach. Each of the 375 topics, and its corresponding intent name, was used to create a custom fine-tuned Ada model. Some examples of the fine-tuning data are shown in the accompanying images.

The result from our benchmarking test was 44.25% accuracy. This wasn’t too surprising because we hadn’t given the model much to ‘learn’ from in order to classify the intent of the user’s message. We also found that the model would return plausible, but fictitious, intent names that were in a similar format to the ones provided to it.
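One way to measure how often a model invents intent names is to compare each returned completion against the known list. The sketch below assumes completions are plain strings; the function name and sample intents are hypothetical.

```python
def invented_intents(predictions, known_intents):
    """Return the predictions that do not match any known intent name."""
    known = {name.strip().lower() for name in known_intents}
    return [p for p in predictions if p.strip().lower() not in known]


# Hypothetical intent names and model outputs for illustration.
known = ["check_order_status", "request_refund"]
outputs = [" check_order_status", "request_refund\n", "check_refund_status"]

made_up = invented_intents(outputs, known)
print(made_up)  # ['check_refund_status']
```

Here `check_refund_status` follows the same naming format as the real intents but was never defined, which is the kind of plausible invention we saw.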

Summary results for fine-tuning topics

44.25% accuracy using a fine-tuned Ada model with 375 ‘topic’ summaries covering 75 intents, with 4-6 topics defined per intent.

As a second-stage test, we took all of the existing training data used for the ML model and created another fine-tuned GPT-3 model (Ada). This equated to just over 9000 training questions, so it provided the model with many more real-life examples. Against our benchmark of 400 previously unseen utterances, it now predicted the correct intent 65% of the time. We also found that it didn’t invent new intent names. Accuracy increased to 70% when using a fine-tuned Davinci model.

Summary results for fine-tuning training phrases

65% accuracy using a fine-tuned Ada model with 9000 training phrases covering 55 intents

70% accuracy using a fine-tuned Davinci model with 9000 training phrases covering 55 intents

Thoughts on fine-tuning for classification

This approach requires preparation of data into a ‘prompt’ and ‘completion’ pattern. There is a one-off charge to create a fine-tuned model, based on the token usage for that particular model. Sending subsequent queries to that fine-tuned model has a higher token charge than using the regular GPT-3 base model.
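As a rough sketch of that preparation step, the snippet below converts (utterance, intent) pairs into JSONL records with `prompt` and `completion` keys. The separator, the leading space and the newline stop marker follow the conventions OpenAI suggested for base-model fine-tuning as we understand them; treat the exact strings, the helper names and the sample data as assumptions.

```python
import json

SEPARATOR = "\n\n###\n\n"  # assumed marker for the end of the prompt
STOP = "\n"                # assumed marker for the end of the completion


def to_finetune_record(text, intent):
    """One training example in the prompt/completion pattern."""
    return {"prompt": text + SEPARATOR, "completion": " " + intent + STOP}


def to_jsonl(examples):
    """Serialise (text, intent) pairs as one JSON object per line."""
    return "\n".join(json.dumps(to_finetune_record(t, i)) for t, i in examples)


# Hypothetical training phrases for illustration.
examples = [
    ("where is my parcel", "check_order_status"),
    ("I want my money back", "request_refund"),
]
jsonl = to_jsonl(examples)
print(jsonl)
```

At query time the same separator has to be appended to the user’s utterance, so that the model recognises where the prompt ends and the classification should begin.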

One drawback we discovered was that we couldn’t easily add in those extra ‘rules’ prior to classification of the user’s utterance. There is a relatively new prompting structure for separating instructions from inputs, called ChatML, but this does not appear to be supported yet for the base GPT-3 models.

Embedding

The last approach is called ‘embedding’. It’s a method of converting words and sentences to numbers (vectors) so that they can be compared for similarity. Embedding vectors are generally used for semantic searching and for retrieving relevant answers from large corpora. The process involves creating an embedding file from blocks of text. In most cases, OpenAI suggest using their fastest and cheapest model, Ada, for this purpose.

Creating embeddings essentially produces a text file of numbers (vectors) that can be downloaded and used outside of the OpenAI infrastructure. If the file is very large, it may be necessary to host it centrally in a dedicated vector database.

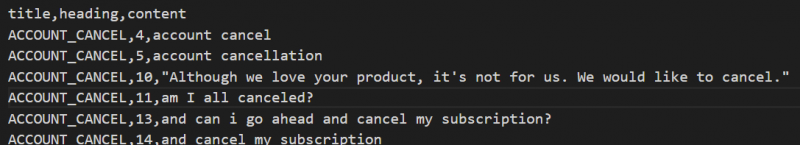

We opted to embed our training data of around 9000 utterances. Each training phrase was embedded, then each of the 400 test utterances was compared to those embeddings for similarity.
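The comparison step can be sketched as a nearest-neighbour lookup. This is a minimal illustration that assumes cosine similarity and picks the intent of the single closest training phrase; the 3-dimensional vectors are made-up stand-ins for real embeddings, which are far longer and come from the embedding API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def classify(query_vec, embedded_training):
    """Return (intent, score) of the training phrase most similar to the query."""
    best_intent, best_score = None, -1.0
    for vec, intent in embedded_training:
        score = cosine_similarity(query_vec, vec)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent, best_score


# Toy vectors and hypothetical intent names for illustration.
training = [
    ([0.9, 0.1, 0.0], "check_order_status"),
    ([0.1, 0.9, 0.1], "request_refund"),
]
intent, score = classify([0.8, 0.2, 0.1], training)
print(intent, round(score, 3))
```

The test utterance must be embedded with the same model as the training phrases, otherwise the vectors are not comparable.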

The result was 60% accuracy, practically the same as the regular ML model itself.

Summary results for embedding

60% accuracy using embeddings from the Ada model with 9000 training phrases covering 55 intents

Thoughts on embeddings for classification

Once the custom data is embedded, having a file that doesn’t expire or incur a cost to use is appealing. However, the phrase being compared to the embedded data must first be embedded using the same model. Embedding seems a robust method to get a factual response returned, based on semantic similarity. It won’t ‘invent’ new intents as it faithfully returns whatever text it was provided with. A similarity score is returned against the most likely intents. However, the numbers we observed were very close together, sometimes only separated by 0.001 on a scale of 0 to 1.
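To illustrate why such close scores are hard to act on, the sketch below keeps the best score per intent and reports the gap between the top two intents. The function name and the scores are hypothetical, chosen only to mirror the 0.001 separation mentioned above.

```python
def top_two_margin(scored):
    """Given (intent, score) pairs, return the best intent and its lead over the runner-up."""
    if not scored:
        raise ValueError("no scores supplied")
    best_per_intent = {}
    for intent, score in scored:
        if score > best_per_intent.get(intent, float("-inf")):
            best_per_intent[intent] = score
    ranked = sorted(best_per_intent.items(), key=lambda kv: kv[1], reverse=True)
    if len(ranked) < 2:
        return ranked[0][0], None  # only one intent, so there is no runner-up
    return ranked[0][0], ranked[0][1] - ranked[1][1]


# Hypothetical similarity scores for illustration.
scores = [("billing", 0.842), ("request_refund", 0.841), ("billing", 0.800)]
winner, margin = top_two_margin(scores)
print(winner, f"{margin:.3f}")
```

With a lead this small, the score says very little about how much more likely the winning intent is than the runner-up, so it is a weak substitute for a confidence value.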

Of all 3 approaches, this method seemed the most complex and didn’t produce better results than adding simple topics to the prompt itself.

Conclusion

Whilst our tests were on a limited scale, they did shine a light on how LLMs might be suited to intent classification. To better evaluate the approaches, let’s return to the original question: ‘could we use an LLM to effectively replace a traditional ML model that powers most intent-based chatbots?’

Fine-tuning provided the best accuracy at 70%, roughly 10 percentage points above the regular ML model’s 60.5%. However, this must be balanced against the limitations of maintaining the training phrases and not being able to implement intent ‘priority’ rules or a ‘fallback’ response. It’s also worth noting that the Davinci model took 2 hours to train, at a cost of $21.

Embedding approaches provided results generally in-line with the accuracy of a traditional ML model, but they still required a large set of curated training phrases. From our tests, they didn’t seem suited for better intent recognition or an improved workflow. In fact, making changes to the training questions would take a lot longer than retraining a ‘built-in’ ML model on the bot platform itself.

However, we can see a use case for employing the prompt design approach. It’s relatively quick to create a list of intents with no need to use any additional data or APIs. It could really accelerate building a first iteration chatbot, or perhaps a PoC or demo. There’s no requirement to have a lot of training data up-front and anyone can pad out the ‘topics’ to describe the purpose of the intents. For this scenario, we think that fewer intents would most likely result in higher accuracy, and the low usage would make it a cost effective option. The ability to ‘direct’ the classification by way of the guardrail ‘rules’ is also highly beneficial.

It is also worth considering that any chatbot using an external LLM as the intent classification ‘manager’ would greatly suffer if the API was unavailable for any length of time. In this article we have focused solely on OpenAI/GPT but naturally other LLMs are available that might have different reliability factors, or they may be differently suited to this task than those we have tested.

We also noted that in many instances across all of the tests, where the intent was misclassified, the proposed intent was very plausible and rational. For example, the utterances contained multiple intents or they indicated a particular intent without expressly stating it – something that humans are (currently) superior at!